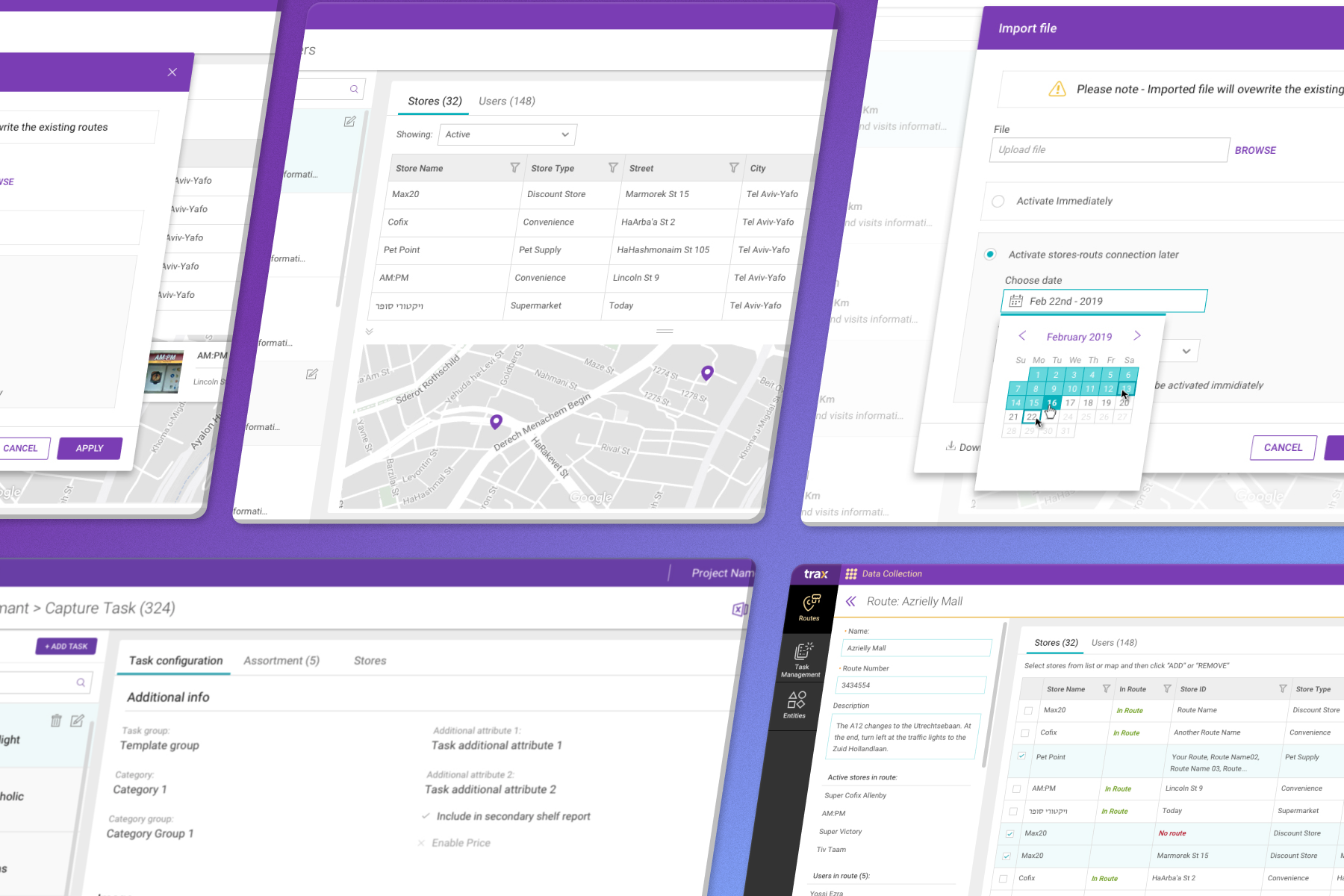

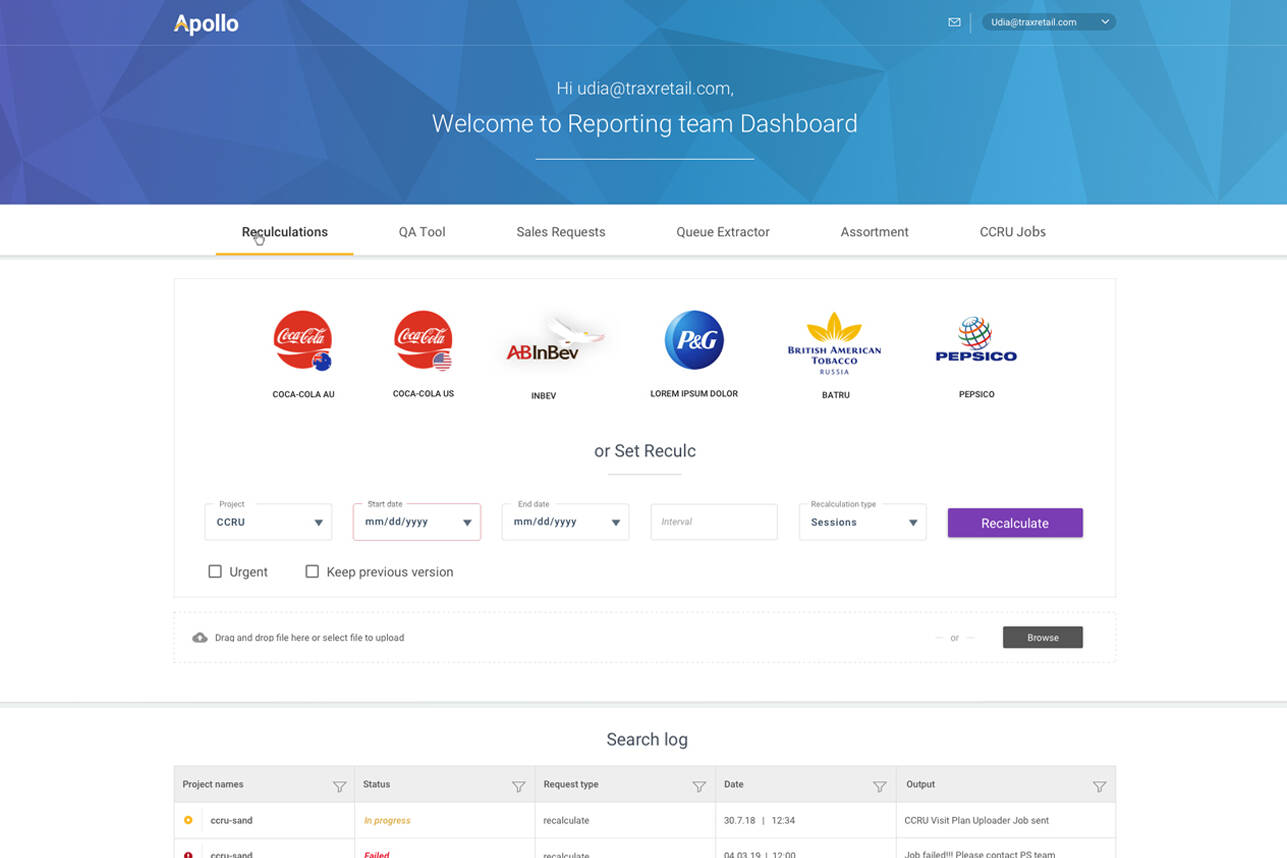

Trax Retail: Native Mobile Data Collection App

About Trax Image Recognition

Trax is a global leader in computer vision and retail image recognition, providing retailers and brands with real-time shelf intelligence. The company blends advanced AI with in-store execution tools to improve on-shelf availability, optimize product placement, and drive smarter retail operations.

About The Product

Native Mobile Data Collection App

Trax’s native mobile application streamlines and simplifies in-store data collection. Designed for field teams, the app enables fast, accurate capture of shelf-level information – from product placement and stock levels to planogram compliance – directly from store shelves. Its intuitive interface and real-time reporting capabilities help retailers monitor store conditions, identify opportunities, and act quickly to optimize merchandising and inventory management.

Challenges

Trax’s mobile collection app is the primary tool used by CPG customers to capture in-store shelf data for the image recognition engine. Because the engine is highly sophisticated, it requires users to follow specific capture guidelines to ensure accuracy.

The human factor creates a major challenge: field representatives each have their own habits and techniques when taking photos on their mobile devices. When the capture process isn’t executed correctly, the resulting data becomes incomplete or inaccurate, leading to unreliable shelf insights or, in some cases, capture failures altogether.

A further limitation is the lack of direct communication with end users, making it difficult to gather feedback and understand where the capture experience breaks down.

My Role in this Project

Image Capturing Process Guide – Vision, Ideation & Creation / Continuous Mobile Feature Development.

My role in Trax’s mobile department was to design the user experience for new features within the mobile collection app used by field teams in CPG organizations. The app supports day-to-day field operations, including in-store visits and task execution. I worked on a range of capabilities such as task completion flows, route planning, store information access, and navigation support.

The core function of the mobile app is to capture in-store shelf images for processing by the image recognition engine. However, the capture flow felt incomplete. It imposed more restrictions than the average user could remember and consistently follow, which led to errors and inconsistent data quality.

Research:

To pinpoint the root of the problem, I began with a qualitative usability test. I recruited eight employees from various departments and asked each of them to go through the image-capture flow. The outcomes were mixed. Those already familiar with the process completed it correctly, while those without prior knowledge made frequent mistakes, exposing an inconsistency driven by a lack of guidance.

Next, I pushed for Mixpanel insights. I implemented a dedicated event to track where users dropped off, canceled, or were forced out of the flow. The data showed that roughly 30 percent of users failed to complete the process, resulting in user frustration and unreliable reporting. The product team agreed that the capture flow had a structural issue.
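To make the measurement concrete, here is a minimal sketch of how a failure rate can be derived from flow events once they are exported. It is an illustration only: the event names (`capture_started`, `capture_completed`, and so on), the `(session_id, event_name)` shape, and the sample data are all hypothetical, and the code does not call the Mixpanel API.

```python
from collections import Counter, defaultdict

# Hypothetical event names; the real event schema is not described in this case study.
COMPLETED = "capture_completed"
EXIT_EVENTS = {"capture_dropped", "capture_canceled", "capture_forced_exit"}


def failure_rate(events):
    """Return the fraction of sessions that never reached the completed event.

    `events` is an iterable of (session_id, event_name) pairs.
    """
    names_by_session = defaultdict(set)
    for session_id, name in events:
        names_by_session[session_id].add(name)
    if not names_by_session:
        return 0.0
    failed = sum(1 for names in names_by_session.values() if COMPLETED not in names)
    return failed / len(names_by_session)


def exit_breakdown(events):
    """Count how often each kind of exit event occurred."""
    return Counter(name for _, name in events if name in EXIT_EVENTS)


# Made-up sample: 10 sessions, 7 complete and 3 exit early.
sample = [(f"s{i}", "capture_started") for i in range(10)]
sample += [(f"s{i}", COMPLETED) for i in range(7)]
sample += [
    ("s7", "capture_dropped"),
    ("s8", "capture_canceled"),
    ("s9", "capture_forced_exit"),
]

print(failure_rate(sample))    # 0.3
print(exit_breakdown(sample))
```

Counting per session rather than per event keeps a single user who retries several times from inflating the rate, which is one reasonable choice among several.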

I continued the research by examining similar patterns and solutions across domains: native Android/iOS camera apps, shopping cart flows, PDF scanning tools, and AR mobile game mechanics.

Solution:

The analysis showed that the core issue was the lack of real-time feedback and guidance. Users had no clear indication of whether they were capturing images correctly.

To address this, I designed an onboarding flow and in-camera guidance that delivers immediate visual feedback on direction, alignment, blurriness, and distance, so users can see exactly how to perform each capture.

Within the camera view, I introduced a tile-based status panel that reflects the quality of each captured image. When an image meets the required criteria, the tile appears as a positive confirmation. If the image is captured incorrectly, the tile turns red, signaling that it must be retaken.
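The tile logic can be sketched roughly as follows. This is a simplified model, not the shipped implementation: it assumes each captured image has already been evaluated against the four guidance criteria (direction, alignment, blurriness, distance) and that a single failed check is enough to require a retake. The type and field names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class TileStatus(Enum):
    OK = "ok"          # shown as a positive confirmation
    RETAKE = "retake"  # shown in red; the image must be captured again


@dataclass
class CaptureChecks:
    """Outcome of the per-image quality checks (hypothetical structure)."""
    direction_ok: bool
    alignment_ok: bool
    sharpness_ok: bool
    distance_ok: bool


def failed_checks(checks: CaptureChecks) -> list[str]:
    """List the criteria the image did not meet, in a fixed order."""
    results = {
        "direction": checks.direction_ok,
        "alignment": checks.alignment_ok,
        "blurriness": checks.sharpness_ok,
        "distance": checks.distance_ok,
    }
    return [name for name, ok in results.items() if not ok]


def tile_status(checks: CaptureChecks) -> TileStatus:
    """Assumption: any single failed check turns the tile red."""
    return TileStatus.RETAKE if failed_checks(checks) else TileStatus.OK


blurry = CaptureChecks(direction_ok=True, alignment_ok=True,
                       sharpness_ok=False, distance_ok=True)
print(tile_status(blurry), failed_checks(blurry))
```

Returning the list of failed criteria alongside the status lets the interface tell the user why a retake is needed, not only that one is needed.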

Since one of the strict requirements is maintaining a shooting angle below 15 degrees, I added a dynamic angle indicator sourced from the device’s gyroscope. When the angle exceeds the threshold, the capture button becomes disabled and reactivates only when the device is positioned correctly.
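The gating rule itself is small enough to show as a sketch. It assumes the tilt angle has already been derived from the device sensors and is given in degrees. The function name is hypothetical, and treating exactly 15 degrees as out of range is an assumption based on the "below 15 degrees" wording.

```python
MAX_SHOOTING_ANGLE_DEG = 15.0  # threshold stated in the text


def capture_enabled(angle_deg: float,
                    max_angle_deg: float = MAX_SHOOTING_ANGLE_DEG) -> bool:
    """Return True while the device tilt stays strictly below the limit.

    The sign is ignored so that tilting in either direction is treated alike.
    """
    return abs(angle_deg) < max_angle_deg


# Simulated readings: the user tilts the phone too far, then corrects it.
readings = [4.0, 12.5, 15.0, 22.0, 9.0]
states = [capture_enabled(angle) for angle in readings]
print(states)  # [True, True, False, False, True]
```

In a real camera view this check would run on every sensor update, with the button state bound to its result.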

The guide walks users step-by-step through both the capture process and the stitching sequence, reducing errors and improving overall data accuracy.

This feature reduced the capture failure rate from roughly 30 percent to about 6 percent.

Image Capturing Process

Trax Mobile App screens

Company: Trax Retail
Product: Data Collection Mobile App
Year: 2020